- Apr 13, 2012

- First Name

- George

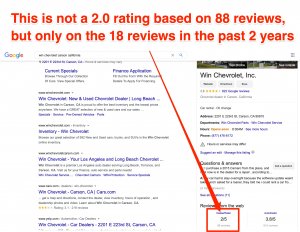

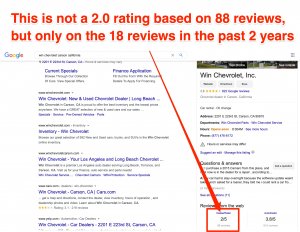

When you Google your dealership name, what third parties appear in the search results? On your Google My Business (GMB) page, what "Reviews from the web" appear? For many dealers, DealerRater (DR) appears alongside their dealer listing. DealerRater uses schema markup, along with its large number of reviews, to rank on page 1 for many dealers and to appear as a third-party review source on their GMB pages. However, the review average shown is not based on the stated number of reviews, which could mislead consumers.

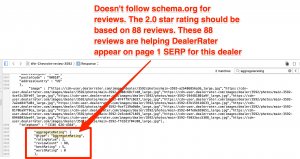

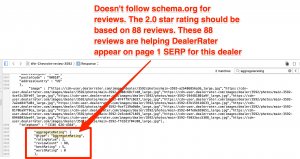

In the attached screenshot, Win Chevrolet in Carson, California has a 2.0-star rating on DR, but that rating is based only on the 18 reviews from the last 2 years. To be accurate, I feel the average should be based on all 88 reviews, since that larger review count is what DR is using to score well with schema (image also attached).
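To make the gap concrete, here is a rough Python sketch of the arithmetic. Only the 18 recent reviews, the 2.0 average, and the 88 total come from the screenshot. The 70 older reviews are not visible, so the 4.5-star value used for them is purely a made-up assumption to show how far the two averages can drift apart.

```python
def average(ratings):
    """Plain arithmetic mean of a list of star ratings."""
    return sum(ratings) / len(ratings)

# From the screenshot: 18 reviews in the last 2 years, averaging 2.0 stars.
recent = [2.0] * 18

# ASSUMPTION: the other 70 reviews (88 - 18) are not shown anywhere,
# so 4.5 stars each is an invented figure for illustration only.
older = [4.5] * 70

windowed_average = average(recent)           # what the listing displays
all_time_average = average(recent + older)   # what 88 reviews would imply

print(f"last 2 years ({len(recent)} reviews): {windowed_average:.2f}")
print(f"all time ({len(recent + older)} reviews): {all_time_average:.2f}")
```

With those assumed older ratings, the same dealer would show roughly 4 stars instead of 2, which is why the count shown next to the average matters so much.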

The challenge is this: a dealer works hard to build up a 4-5 star rating, but if they don't keep driving new DR reviews, their average can fall dramatically. I think dealers should be sheltered from a few recent low reviews by having their larger, long-term review history count toward the average. Shouldn't review sites either calculate the average on the aggregate, or tell schema that the average is based on a lower number of reviews? What do you think?
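For anyone curious what the second option could look like, here is a minimal sketch that builds schema.org `AggregateRating` markup where `reviewCount` is always the number of ratings that actually went into `ratingValue`. I don't know how DealerRater generates its markup, so this is not their implementation. The function name `aggregate_rating_jsonld` and the dealer name are hypothetical; the property names (`ratingValue`, `reviewCount`, `bestRating`, `worstRating`) are standard schema.org ones.

```python
import json

def aggregate_rating_jsonld(dealer_name, ratings):
    """Build JSON-LD where the count matches the ratings that were averaged.

    Hypothetical helper for illustration; not any review site's real code.
    """
    if not ratings:
        raise ValueError("need at least one rating to compute an average")
    return {
        "@context": "https://schema.org",
        "@type": "AutoDealer",
        "name": dealer_name,
        "aggregateRating": {
            "@type": "AggregateRating",
            # The average and the count come from the same list,
            # so they cannot disagree with each other.
            "ratingValue": round(sum(ratings) / len(ratings), 1),
            "reviewCount": len(ratings),
            "bestRating": 5,
            "worstRating": 1,
        },
    }

# Usage: if only the 18 recent reviews are averaged, the markup says 18, not 88.
markup = aggregate_rating_jsonld("Example Dealer", [2.0] * 18)
print(json.dumps(markup, indent=2))
```

The point of the design is simply that whichever set of reviews the site chooses to average, the same set is what gets reported in the markup.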
